Presto and Trino are open-source distributed query engines that run on a cluster of machines. Both were designed to be flexible and extensible, and both support a wide variety of use cases with diverse characteristics. Amazon Athena makes it easy for anyone with SQL skills to quickly analyze large-scale datasets, and it is a great choice for getting started with analytics if you have nothing set up yet: just point it at the data and get started for $5 per terabyte scanned.
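To make the pay-per-scan model concrete, here is a small cost estimator. It is a sketch that assumes decimal terabytes (10^12 bytes) and the $5/TB rate quoted above, and it ignores any per-query minimums, rounding, or regional price differences AWS may apply. The function names are hypothetical.

```python
BYTES_PER_TB = 10**12      # assumption: decimal terabytes
PRICE_PER_TB_USD = 5.0     # rate quoted in this post; check current AWS pricing


def scan_cost_usd(bytes_scanned: int, price_per_tb: float = PRICE_PER_TB_USD) -> float:
    """Estimate the charge for one query from the number of bytes it scanned."""
    if bytes_scanned < 0:
        raise ValueError("bytes_scanned must be non-negative")
    return bytes_scanned / BYTES_PER_TB * price_per_tb


def monthly_cost_usd(queries_per_day: int, bytes_per_query: int, days: int = 30) -> float:
    """Estimate a month of spend for a steady query workload."""
    return scan_cost_usd(queries_per_day * bytes_per_query * days)


if __name__ == "__main__":
    # 100 queries a day, each scanning 10 GB, for 30 days = 30 TB scanned.
    print(monthly_cost_usd(queries_per_day=100, bytes_per_query=10 * 10**9))  # prints 150.0
```

Because the cost scales with bytes scanned rather than with cluster uptime, the same arithmetic is a quick way to compare against the fixed cost of running your own Presto or Trino cluster.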

If you are reading this blog, you are probably seriously considering implementing a data lake architecture, or you have already figured out that you need PrestoDB or Trino (formerly known as PrestoSQL) to support interactive analytics use cases and a data-driven culture. But should you start with a simple solution like Amazon Athena, which is based on Presto, or manage your own PrestoDB or Trino clusters? To make an informed decision, the first step is to understand the pros and cons of each option, and what each offers in the context of your specific use cases, customer requirements and SLAs, datasets, and queries. Amazon Athena is a serverless query service that provides the easiest way to run ad-hoc queries on data in S3 without the hassle of setting up and managing clusters.

While both platforms are used to extract value from massive amounts of data in the data lake, Athena and Presto/Trino each have benefits and drawbacks for different use cases; this post highlights when and how to get the most value out of each.

I think secure access to Athena from R is an important topic, since it will be needed as soon as a production setting is considered. I loaded credentials with `aws.signature::use_credentials(profile = 'research')`, requested an MFA session with `session <- get_session_token(id = 'arn:aws:iam:::mfa/', ...)`, and exported it with `Sys.setenv(AWS_SESSION_TOKEN = session$SessionToken)`. I also tried the `dbConnect()` command with `aws_credentials_provider_class = ".EnvironmentVariableCredentialsProvider"`, but had no success either.

When I'm back from vacation, I'll gladly join in here with any PRs/help/etc.
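For comparison, the same MFA flow can be sketched in Python with boto3 (an illustration, not code from any of the R packages discussed). One possible cause of the failure above, offered as an assumption rather than something confirmed in the thread: the R snippet sets only `AWS_SESSION_TOKEN`, but a session token is accepted only together with the temporary access key ID and secret key returned by the same STS call, so all three need to be exported before an environment-variable credentials provider can use them. `get_session_token` with `SerialNumber`, `TokenCode`, and `DurationSeconds` is the boto3 STS call; the wrapper's name is hypothetical.

```python
import os
from typing import Any, Dict, MutableMapping, Optional


def export_mfa_session_credentials(
    sts_client: Any,
    mfa_serial: str,
    token_code: str,
    env: Optional[MutableMapping[str, str]] = None,
    duration_seconds: int = 3600,
) -> Dict[str, str]:
    """Request temporary credentials with an MFA code and export all three parts.

    sts_client is expected to behave like boto3.client("sts").
    """
    if env is None:
        env = os.environ  # default: the current process environment
    response = sts_client.get_session_token(
        SerialNumber=mfa_serial,
        TokenCode=token_code,
        DurationSeconds=duration_seconds,
    )
    creds = response["Credentials"]
    # The session token only works alongside the temporary key pair issued
    # with it, so export all three variables, not just the token.
    exported = {
        "AWS_ACCESS_KEY_ID": creds["AccessKeyId"],
        "AWS_SECRET_ACCESS_KEY": creds["SecretAccessKey"],
        "AWS_SESSION_TOKEN": creds["SessionToken"],
    }
    env.update(exported)
    return exported
```

With a real client this would be called as `export_mfa_session_credentials(boto3.client("sts"), "arn:aws:iam::<account>:mfa/<user>", "<6-digit code>")`, where the angle-bracket values are placeholders.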

I was also going to head down the path of dplyr integration, but one thing that needs to be handled for that is including the schema name, and I'm not sure it's worth the effort in the short term.

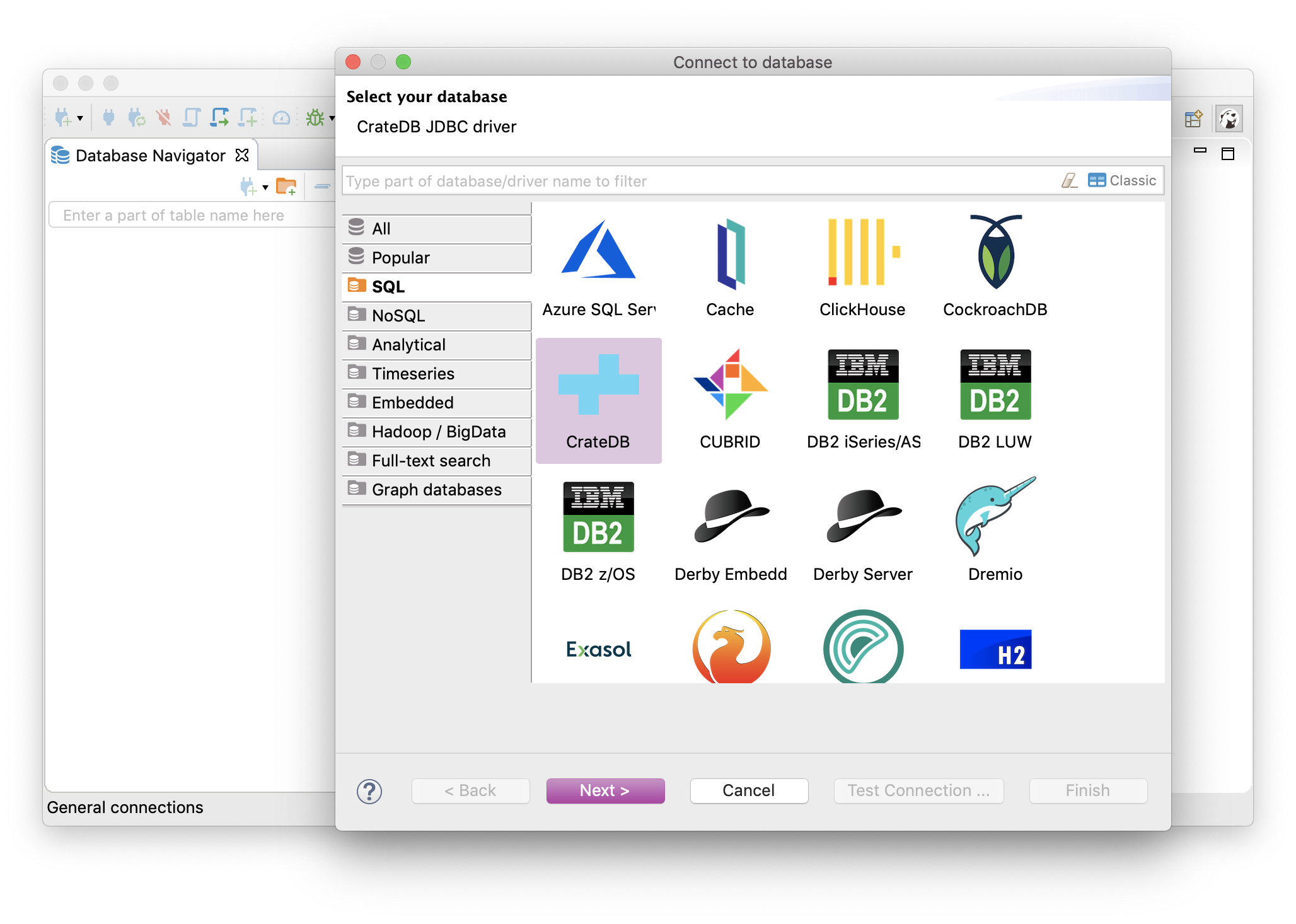

I'd suggest (I was going to do this, too) either making an athenajars package and keeping it updated with the latest AWS Athena JDBC jar, or showing an explicit message when the driver can't be found, paired with a downloader function.

This is just one anecdote, and he is writing about the 1.x version of the driver, but it may be worth exploring.

I had started a package a couple of weeks ago, since one of the more overtly gnarly bits of Athena is the auth (if you're not using basic credentials). I have no particular need to be the keeper of an Athena package (but I'd lobby for a quirky name, like the Greek god who was Athena's helper :-). Maintaining my Apache Drill wrapper package is plenty of work on its own, so you folks jumping in here to fill a need is ++gd. Package-naming bias aside (it really doesn't matter what the package is called), I do think the auth needs to be a seamless part of the package, since there are so many ways to authenticate with AWS. It should also, likely, support non-default connection parameters (for some stuff at work I need to change some of them, hence my inclusion). CRAN is going to have a hissy fit about the auto-download of the driver. Feel free to steal as little or as much from my sparsely populated, nascent package start as you like.
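Supporting non-default connection parameters can be as simple as merging user overrides into a set of defaults before building the JDBC URL. The sketch below assumes the Simba-based Athena JDBC driver's `jdbc:awsathena://` URL form with semicolon-separated `Key=Value` properties; `AwsRegion` and `S3OutputLocation` are property names from that driver's documentation, but they should be checked against whichever driver version the package ships. The function name and the default region are hypothetical.

```python
from typing import Mapping, Optional

# Hypothetical defaults; property names assume the Simba Athena JDBC driver.
DEFAULT_PROPERTIES = {"AwsRegion": "us-east-1"}


def build_athena_jdbc_url(
    s3_output_location: str,
    overrides: Optional[Mapping[str, str]] = None,
) -> str:
    """Build a JDBC URL from defaults plus caller-supplied overrides."""
    props = dict(DEFAULT_PROPERTIES)
    props["S3OutputLocation"] = s3_output_location
    props.update(overrides or {})  # caller values win over defaults
    for key, value in props.items():
        # ';' separates properties and '=' separates key from value,
        # so reject them instead of producing a malformed URL.
        if ";" in key or "=" in key or ";" in str(value):
            raise ValueError(f"invalid character in property {key!r}")
    return "jdbc:awsathena://" + ";".join(f"{k}={v}" for k, v in props.items())
```

Keeping the defaults in one dictionary also gives the package a single place to document which properties it sets on the user's behalf.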

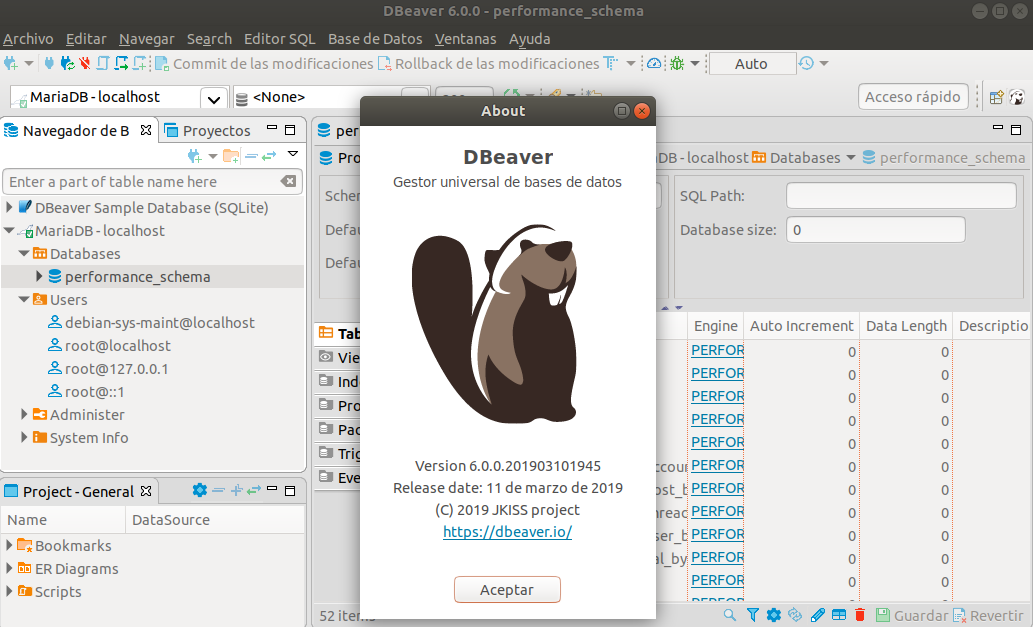

So I've been doing some googling about PyAthenaJDBC while trying to triage #16, and came across a report from someone who has tested with various clients (Tableau, DBeaver, and a basic Java app) and found that retrieving data is a lot slower than it should be. When you run a query in the AWS Athena console, a results CSV is written to S3 very quickly. In a test with a single table of 15 million rows, the Athena query and the CSV write to S3 completed in under 2 minutes, and downloading the file to a local machine took less than a minute. Doing the same operation via the JDBC driver took over 2 hours. A better strategy may therefore be to query the metadata, start the Athena query asynchronously, poll it until completion, then download the CSV file directly from the S3 staging directory and combine it with the metadata to get correct types.
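The asynchronous strategy described above can be sketched in Python with boto3-style clients (the thread itself is about R and JDBC, so this is an illustration, not any package's implementation). Two assumptions: column types are read from the `ResultSetMetadata` returned by `get_query_results` after the query finishes, rather than queried beforehand as the text suggests, and empty CSV fields are treated as NULL, which conflates NULL with empty strings. `start_query_execution`, `get_query_execution`, `get_query_results`, and `get_object` are real boto3 Athena/S3 calls; the helper names and the type map are hypothetical and incomplete.

```python
import csv
import io
import time
from typing import Any, Callable, Dict, List, Tuple

TERMINAL_STATES = {"SUCCEEDED", "FAILED", "CANCELLED"}

# Hypothetical, partial map from Athena column types to Python converters;
# unknown types (dates, decimals, arrays, ...) fall back to plain strings.
CONVERTERS: Dict[str, Callable[[str], Any]] = {
    "tinyint": int, "smallint": int, "integer": int, "bigint": int,
    "float": float, "real": float, "double": float,
    "boolean": lambda s: s.lower() == "true",
}


def split_s3_uri(uri: str) -> Tuple[str, str]:
    """Split 's3://bucket/key' into (bucket, key)."""
    if not uri.startswith("s3://"):
        raise ValueError(f"not an S3 URI: {uri}")
    bucket, _, key = uri[len("s3://"):].partition("/")
    return bucket, key


def run_and_wait(athena: Any, sql: str, output_location: str,
                 poll_seconds: float = 1.0,
                 sleep: Callable[[float], None] = time.sleep) -> Dict[str, Any]:
    """Start a query asynchronously and poll until it reaches a terminal state."""
    query_id = athena.start_query_execution(
        QueryString=sql,
        ResultConfiguration={"OutputLocation": output_location},
    )["QueryExecutionId"]
    while True:
        execution = athena.get_query_execution(QueryExecutionId=query_id)["QueryExecution"]
        state = execution["Status"]["State"]
        if state in TERMINAL_STATES:
            break
        sleep(poll_seconds)
    if state != "SUCCEEDED":
        raise RuntimeError(f"query {query_id} finished in state {state}")
    return execution


def parse_typed_csv(text: str, columns: List[Dict[str, str]]) -> List[Dict[str, Any]]:
    """Apply Athena column types to the string values of a result CSV."""
    rows = []
    for raw in csv.DictReader(io.StringIO(text)):
        row = {}
        for col in columns:
            value = raw[col["Name"]]
            convert = CONVERTERS.get(col["Type"], str)
            row[col["Name"]] = None if value == "" else convert(value)
        rows.append(row)
    return rows


def fetch_typed_results(athena: Any, s3: Any, sql: str, output_location: str,
                        **poll_kwargs: Any) -> List[Dict[str, Any]]:
    """Run a query, download its CSV from the staging directory, and type it."""
    execution = run_and_wait(athena, sql, output_location, **poll_kwargs)
    query_id = execution["QueryExecutionId"]
    # MaxResults=1 keeps the response small; only the column metadata is needed.
    metadata = athena.get_query_results(QueryExecutionId=query_id, MaxResults=1)
    columns = metadata["ResultSet"]["ResultSetMetadata"]["ColumnInfo"]
    bucket, key = split_s3_uri(execution["ResultConfiguration"]["OutputLocation"])
    body = s3.get_object(Bucket=bucket, Key=key)["Body"].read().decode("utf-8")
    return parse_typed_csv(body, columns)
```

With real clients this would be called as `fetch_typed_results(boto3.client("athena"), boto3.client("s3"), sql, "s3://<staging-bucket>/<prefix>/")`, with placeholder bucket and prefix. For very large results, streaming the S3 body instead of reading it into memory at once would be the natural next refinement.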